Bessel’s equation arises in countless physics applications and has the form

$$x^2 y'' + x y' + (x^2 - \nu^2)\, y = 0 \tag{1}$$

where the constant $\nu$ is known as the order of the Bessel function $y$ which solves (1). The method of Frobenius can be used to find series solutions for $y$ of the form

$$y = \sum_{k=0}^{\infty} a_k x^{k+r} \tag{2}$$

where $r$ is a number to be found by substituting (2) and its relevant derivatives into (1). We assume that $a_0$ is not zero, so the first term of the series will be $a_0 x^r$. Successive differentiation of (2) and substitution into (1) produces, for each power of $x$ in (2), an equation equal to zero involving combinations of several coefficients $a_k$. (The total coefficient of each power of $x$ must be zero to ensure the right-hand side of (1) ends up as zero.) The equation for the coefficient of $x^r$ is used to find the possible values of $r$ and is known as the indicial equation. For the Bessel equation in (1), this procedure results in the indicial equation

$$r^2 - \nu^2 = 0$$

so $r = \pm\nu$ and we need to find two solutions, one for $r = \nu$ and another for $r = -\nu$. A linear combination of these two solutions can then be used to construct a general solution of (1). The Frobenius procedure also leads to $a_1 = 0$ and the following recursion formula for the remaining coefficients:

$$a_k = -\frac{a_{k-2}}{(k+r)^2 - \nu^2}, \qquad k \ge 2 \tag{3}$$

We use this to find coefficients for $r = \nu$ first, and can then simply replace $\nu$ by $-\nu$ to get the coefficients for $r = -\nu$. Here, we focus only on the case $r = \nu$ which upon substitution in (3) gives

$$a_k = -\frac{a_{k-2}}{k\,(k + 2\nu)}$$

Evaluating these coefficients with a starting value of $a_0 = \frac{1}{2^{\nu}\,\Gamma(\nu + 1)}$ results in solutions called Bessel functions of the first kind and order $\nu$, usually denoted by $J_\nu(x)$. In the present note, I am concerned only with the Bessel function of the first kind and order zero, $J_0(x)$, obtained by setting $\nu = 0$. We then have a starting value $a_0 = 1$ and we obtain the following coefficients in the power series for $J_0(x)$:

$$a_1 = 0, \quad a_2 = -\frac{1}{2^2}, \quad a_3 = 0, \quad a_4 = \frac{1}{2^2 \cdot 4^2}, \quad a_5 = 0, \quad a_6 = -\frac{1}{2^2 \cdot 4^2 \cdot 6^2}$$

and so on. So, $a_1 = 0$ leads to all odd-numbered coefficients being zero and we end up with the following generalized series expression for the Bessel function of the first kind and order zero:

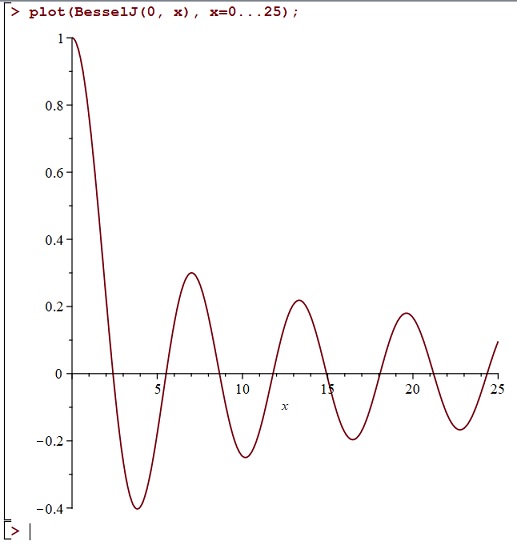
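As a quick numerical sanity check on the coefficients above, here is a minimal Python sketch (the note itself uses Maple, so the language and the function names are illustrative assumptions). It builds the coefficients from the order-zero recursion $a_k = -a_{k-2}/k^2$ with $a_0 = 1$, $a_1 = 0$, and compares the resulting power series with the standard closed form $\sum_{m \ge 0} \frac{(-1)^m}{(m!)^2} \left(\frac{x}{2}\right)^{2m}$, which is what I take the series (5) to be.

```python
import math


def j0_coefficients(n_terms):
    """Coefficients a_0 .. a_{n_terms-1} for order zero, from a_k = -a_{k-2} / k**2."""
    coeffs = [1.0, 0.0]  # a_0 = 1 and a_1 = 0
    for k in range(2, n_terms):
        coeffs.append(-coeffs[k - 2] / k**2)
    return coeffs[:n_terms]


def j0_series(x, n_terms=60):
    """Sum the Frobenius series a_k * x**k built from the recursion."""
    return sum(a * x**k for k, a in enumerate(j0_coefficients(n_terms)))


def j0_closed_form(x, n_terms=30):
    """Sum (-1)^m / (m!)^2 * (x/2)^(2m), the usual closed form of the same series."""
    return sum(
        (-1) ** m / math.factorial(m) ** 2 * (x / 2) ** (2 * m)
        for m in range(n_terms)
    )


if __name__ == "__main__":
    print(j0_coefficients(7))
    for x in (0.0, 1.0, 2.5, 5.0):
        print(x, j0_series(x), j0_closed_form(x))
```

The two columns printed for each $x$ should agree to within rounding error, since the recursion and the closed form describe the same coefficients.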

We can easily plot $J_0(x)$ using Maple and it looks like a kind of damped cosine function. Here is a quick plot for positive values of $x$:

The series in (5) above is given, albeit in a more general complex-variable setting, as equation (3) on page 16 of the classic work by G. N. Watson, 1922, A Treatise on the Theory of Bessel Functions, Cambridge University Press. (At the time of writing, this monumental book is freely downloadable from several online sites.) In the present note, I am interested in certain integral formulae which give rise to the same series as (5). These are discussed in Chapter Two of Watson’s treatise, and I want to unpick some things from there. In particular, I am intrigued by the following passage on pages 19 and 20 of Watson’s book:

In the remainder of this note, I want to obtain the series for $J_0(x)$ in (5) above directly from the integral in equation (1) in this extract from Watson’s book, adapted to the real-variable case with $n = 0$. I also want to use Watson’s technique of bisecting the range of integration to obtain a clear intuitive understanding of other equivalent integral formulae for $J_0(x)$.

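Begin by setting $z = x$ and $n = 0$ in the integral in (1) in the extract to get

$$J_0(x) = \frac{1}{2\pi} \int_0^{2\pi} \cos(x \sin\theta)\, d\theta \tag{6}$$

To confirm the validity of this, we can substitute $u = x\sin\theta$ into the Taylor series expansion for $\cos u$, namely

$$\cos u = \sum_{k=0}^{\infty} \frac{(-1)^k u^{2k}}{(2k)!}$$

to get

$$\cos(x \sin\theta) = \sum_{k=0}^{\infty} \frac{(-1)^k x^{2k} \sin^{2k}\theta}{(2k)!} \tag{7}$$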

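Now integrate both sides of (7) from $0$ to $2\pi$, using a formula I explored in a previous note for integrating even powers of the sine function, namely

$$\int_0^{2\pi} \sin^{2k}\theta \, d\theta = \frac{2\pi\,(2k)!}{2^{2k}\,(k!)^2}$$

We obtain

$$\int_0^{2\pi} \cos(x \sin\theta)\, d\theta = \sum_{k=0}^{\infty} \frac{(-1)^k x^{2k}}{(2k)!} \cdot \frac{2\pi\,(2k)!}{2^{2k}\,(k!)^2} = 2\pi \sum_{k=0}^{\infty} \frac{(-1)^k}{(k!)^2} \left(\frac{x}{2}\right)^{2k}$$

so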

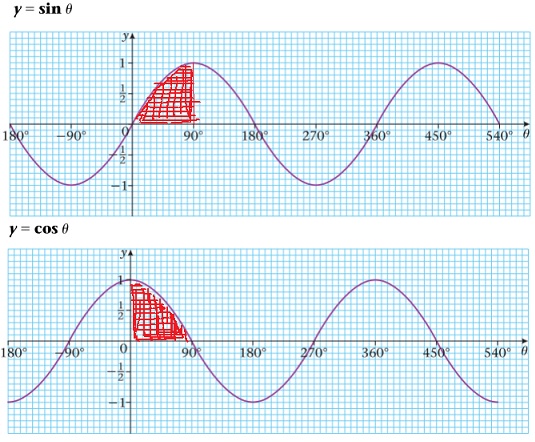
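The two ingredients of this derivation can be checked numerically. The following is a minimal Python sketch, not part of the original Maple work; the function names and the choice of 2000 equally spaced sample points are illustrative assumptions. It checks the even-power sine formula (written as an average over one period) and then compares the average of $\cos(x\sin\theta)$ over $[0, 2\pi]$ with the power series for $J_0(x)$.

```python
import math


def mean_over_period(f, n=2000):
    """Average of f over [0, 2*pi] from n equally spaced samples.

    For smooth 2*pi-periodic integrands this simple rule is very accurate.
    """
    h = 2 * math.pi / n
    return sum(f(i * h) for i in range(n)) / n


def sine_power_mean(k):
    """Closed form (2k)! / (2^(2k) (k!)^2) for the average of sin^(2k) over a period."""
    return math.factorial(2 * k) / (4**k * math.factorial(k) ** 2)


def j0_series(x, n_terms=30):
    """Power series sum of (-1)^k / (k!)^2 * (x/2)^(2k)."""
    return sum(
        (-1) ** k / math.factorial(k) ** 2 * (x / 2) ** (2 * k)
        for k in range(n_terms)
    )


def j0_integral(x):
    """Average of cos(x sin(theta)) over [0, 2*pi], i.e. the right-hand side of (6)."""
    return mean_over_period(lambda t: math.cos(x * math.sin(t)))


if __name__ == "__main__":
    for k in range(5):
        print(k, mean_over_period(lambda t: math.sin(t) ** (2 * k)), sine_power_mean(k))
    for x in (0.5, 1.0, 5.0, 10.0):
        print(x, j0_integral(x), j0_series(x))
```

In each printed row the numerical average and the closed-form value should agree to many decimal places.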

Thus, (6) holds. Notice that the integral in (6), together with its factor of $\frac{1}{2\pi}$, is the average value of the integrand over the interval from $0$ to $2\pi$. Looking at graphs of the sine and cosine functions, we can easily imagine that we can switch between the two, and that this average also remains unchanged if we restrict the range of integration to the interval from $0$ to $\pi$ and divide the integral by $\pi$ instead of $2\pi$.

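Thus, it seems intuitively obvious that the following are equivalent integral formulae for $J_0(x)$:

$$J_0(x) = \frac{1}{2\pi} \int_0^{2\pi} \cos(x \sin\theta)\, d\theta = \frac{1}{2\pi} \int_0^{2\pi} \cos(x \cos\theta)\, d\theta = \frac{1}{\pi} \int_0^{\pi} \cos(x \sin\theta)\, d\theta = \frac{1}{\pi} \int_0^{\pi} \cos(x \cos\theta)\, d\theta$$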

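In addition, if we substitute $u = ix\sin\theta$ into the Taylor series expansion for $e^u$, namely

$$e^u = \sum_{k=0}^{\infty} \frac{u^k}{k!}$$

we get

$$e^{ix\sin\theta} = \sum_{k=0}^{\infty} \frac{(ix)^k \sin^k\theta}{k!}$$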

But when we integrate both sides of this from $0$ to $2\pi$, the odd terms on the right-hand side will vanish (odd powers of the sine function integrate to zero over a full period) and the even terms, since $i^{2k} = (-1)^k$, will be the same as they were in the derivation of (9) above using the Taylor series for cos. We thus obtain another equivalent integral formula for $J_0(x)$:

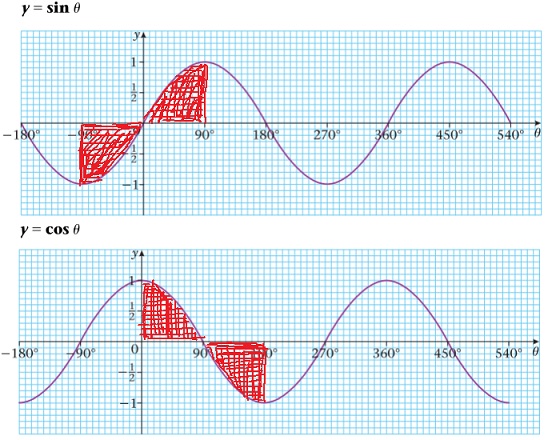
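The vanishing of the odd terms can also be seen numerically. Below is a minimal Python sketch under the same assumptions as before (Python rather than Maple, illustrative function names, equally spaced samples over one period). It shows that odd powers of the sine function average to zero over $[0, 2\pi]$, and that the average of $e^{ix\sin\theta}$ over the same interval is real, up to rounding, and matches the power series for $J_0(x)$.

```python
import cmath
import math


def mean_over_period(f, n=2000):
    """Average of f over [0, 2*pi] from n equally spaced samples (f may be complex)."""
    h = 2 * math.pi / n
    return sum(f(i * h) for i in range(n)) / n


def j0_series(x, n_terms=30):
    """Power series sum of (-1)^k / (k!)^2 * (x/2)^(2k)."""
    return sum(
        (-1) ** k / math.factorial(k) ** 2 * (x / 2) ** (2 * k)
        for k in range(n_terms)
    )


def j0_exponential(x):
    """Average of exp(i x sin(theta)) over [0, 2*pi]; returns a complex number."""
    return mean_over_period(lambda t: cmath.exp(1j * x * math.sin(t)))


if __name__ == "__main__":
    for k in (1, 3, 5):
        print("mean of sin^%d:" % k, mean_over_period(lambda t: math.sin(t) ** k))
    for x in (0.5, 1.0, 5.0):
        print(x, j0_exponential(x), j0_series(x))
```

The imaginary part of each computed average should be zero to within rounding error, which is the numerical counterpart of the odd terms dropping out.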

Furthermore, we can again restrict the range of integration in (12) and switch between sine and cosine as indicated in the following graphs:

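Thus, we obtain the following equivalent integral formulae for $J_0(x)$:

$$J_0(x) = \frac{1}{2\pi} \int_0^{2\pi} e^{ix\sin\theta}\, d\theta = \frac{1}{2\pi} \int_0^{2\pi} e^{ix\cos\theta}\, d\theta = \frac{1}{\pi} \int_0^{\pi} e^{ix\cos\theta}\, d\theta$$

We can imagine easily obtaining many other equivalent integral formulae for $J_0(x)$ in this way.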
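Since these equivalences rest on intuition from graphs, a numerical comparison is a useful cross-check. The sketch below is a hedged illustration in Python (not the Maple used elsewhere in this note; the function names, the midpoint rule, and the number of cells are assumptions). It evaluates several candidate averages and compares each with the power series for $J_0(x)$. It also evaluates the half-range average of $e^{ix\sin\theta}$ over $[0, \pi]$ separately: its real part agrees with $J_0(x)$, but its imaginary part does not vanish, because odd powers of the sine function do not integrate to zero over $[0, \pi]$. Bisecting the range therefore needs a little care in the complex exponential case.

```python
import cmath
import math


def mean_on(f, a, b, n=4000):
    """Average of f over [a, b] using the midpoint rule with n cells."""
    h = (b - a) / n
    return sum(f(a + (i + 0.5) * h) for i in range(n)) / n


def j0_series(x, n_terms=30):
    """Power series sum of (-1)^k / (k!)^2 * (x/2)^(2k)."""
    return sum(
        (-1) ** k / math.factorial(k) ** 2 * (x / 2) ** (2 * k)
        for k in range(n_terms)
    )


def candidate_averages(x):
    """Averages that should all equal J_0(x)."""
    pi, two_pi = math.pi, 2 * math.pi
    return {
        "cos(x sin t) on [0, 2pi]": mean_on(lambda t: math.cos(x * math.sin(t)), 0, two_pi),
        "cos(x cos t) on [0, 2pi]": mean_on(lambda t: math.cos(x * math.cos(t)), 0, two_pi),
        "cos(x sin t) on [0, pi]": mean_on(lambda t: math.cos(x * math.sin(t)), 0, pi),
        "cos(x cos t) on [0, pi]": mean_on(lambda t: math.cos(x * math.cos(t)), 0, pi),
        "exp(i x sin t) on [0, 2pi]": mean_on(lambda t: cmath.exp(1j * x * math.sin(t)), 0, two_pi),
        "exp(i x cos t) on [0, 2pi]": mean_on(lambda t: cmath.exp(1j * x * math.cos(t)), 0, two_pi),
        "exp(i x cos t) on [0, pi]": mean_on(lambda t: cmath.exp(1j * x * math.cos(t)), 0, pi),
    }


def half_range_sine_exponential(x):
    """Average of exp(i x sin t) over [0, pi]; its imaginary part is not zero."""
    return mean_on(lambda t: cmath.exp(1j * x * math.sin(t)), 0, math.pi)


if __name__ == "__main__":
    x = 1.0
    print("series:", j0_series(x))
    for label, value in candidate_averages(x).items():
        print(label, value)
    print("exp(i x sin t) on [0, pi]:", half_range_sine_exponential(x))
```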