For a lecture on deterministic and stochastic general epidemic models, I ran a Python simulation illustrating a threshold phenomenon which determines how an epidemic evolves. I want to record the results of this here.

In a general epidemic model, we assume a closed community of individuals and classify those individuals into three groups, those who are susceptible to disease, those who are infective, and those who are removed (i.e., who have had the disease and are no longer infective). The numbers of each type at time

are denoted by

,

and

respectively. Note that since

, the time evolution of

is automatically determined by the evolution of

and

. At any given time

, only two types of transition are possible. The first type of transition is SUSCEPTIBLE

INFECTIVE in which an infective meets a susceptible and the disease is passed on, causing the number of susceptibles to decrease by one and the number of infectives to increase by one. The second type of transition is INFECTIVE

REMOVED in which an infective recovers from the disease, so the total number of infectives decreases by one and the total number removed increases by one.

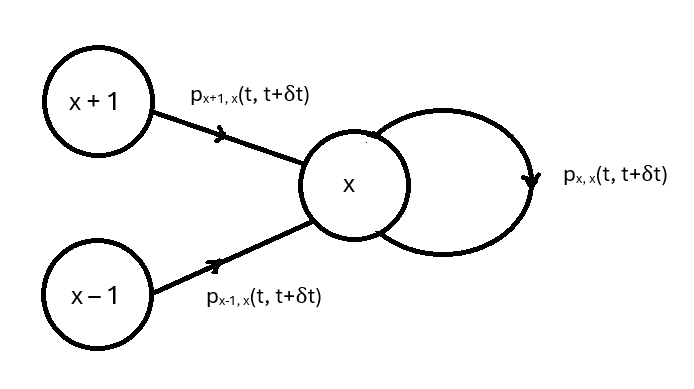

Assuming that each member of the community comes into contact with the remaining others according to a Poisson process with rate

, that there is homogenous mixing so that the probability of one member meeting any other particular member is

, and that a susceptible who becomes infected with the disease only remains infective for a time drawn from an exponential distribution with rate

, then the general epidemic as a stochastic process can be characterised by the following two probability statements corresponding to the two types of transitions outlined above:

The first probability statement says that infectives meet susceptibles according to a Poisson process with rate , while the second probability statement says that infectives recover and become removed according to a Poisson process with rate

, where

and

denote realised numbers of susceptibles and infectives at time

respectively.

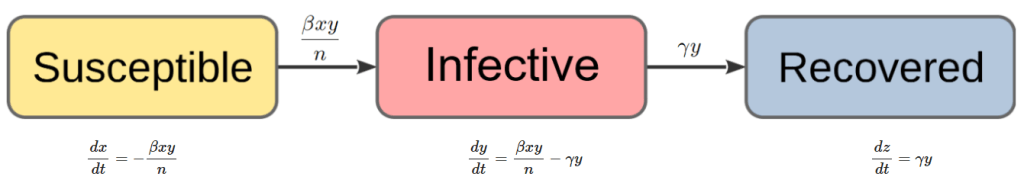

To obtain a deterministic approximation of the above stochastic general epidemic model, we set up ordinary differential equations for the time evolution of ,

and

based on the probability statements (1) and (2). From (1), we see that susceptibles

decrease at the rate

and infectives

correspondingly increase at the same rate, while from (2) we see that infectives also decrease at the rate

and the removed

correspondingly increase at the same rate. We thus get the following system of three coupled ordinary differential equations for the deterministic general epidemic model:

These cannot generally be solved to get explicit expressions for ,

and

as functions of time, but can be solved numerically to get time paths for these variables, as shown below using Python. It is also straightforward to obtain a differential equation for

in terms of

by dividing (4) by (3) to get

where

is a key parameter known as the epidemic parameter. The differential equation (6) can easily be solved given initial conditions ,

,

, to get an equation for

in terms of

:

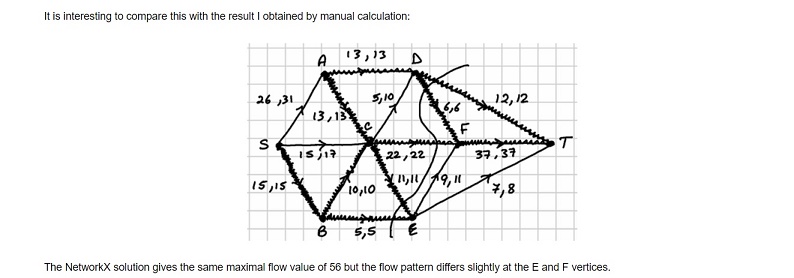

This equation describes the evolution of the epidemic in a `timeless’ way in the -plane and predicts that the epidemic parameter

plays a crucial role. It acts as a threshold parameter in the following sense: if

, the number of infectives never rises above the initial number

and in fact declines steadily from there until it reaches

at which point the epidemic ends (since there are no more infectives to pass on the disease), whereas if

, the number of infectives can rise significantly above

until it reaches a maximum value before beginning to decline from there until the epidemic ends again when

. Public health measures therefore aim to reduce the number of susceptibles

(e.g., by immunisation) and to increase the value of the epidemic parameter

by increasing the removal rate

and decreasing the contact rate

(e.g., by encouraging self-isolation) to try to achieve a scenario in which

.

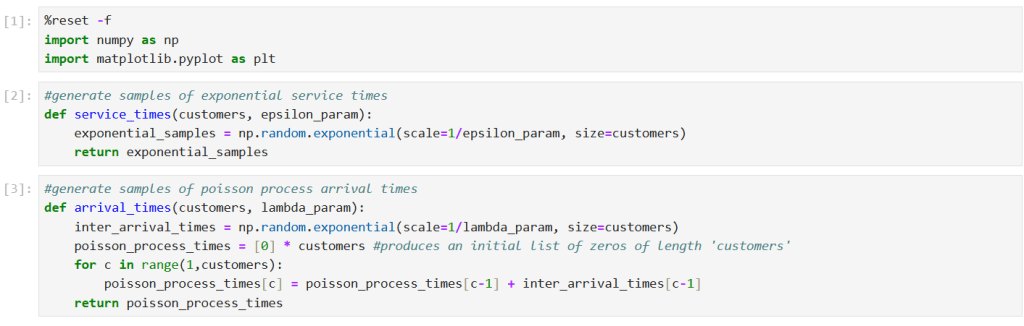

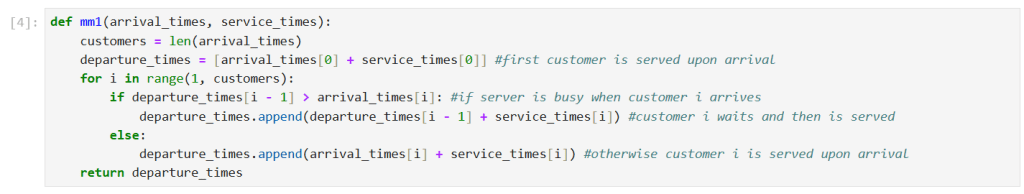

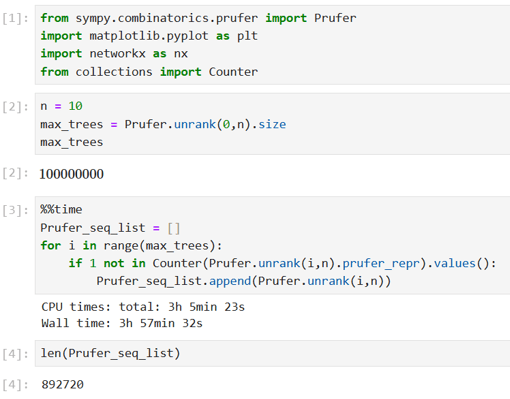

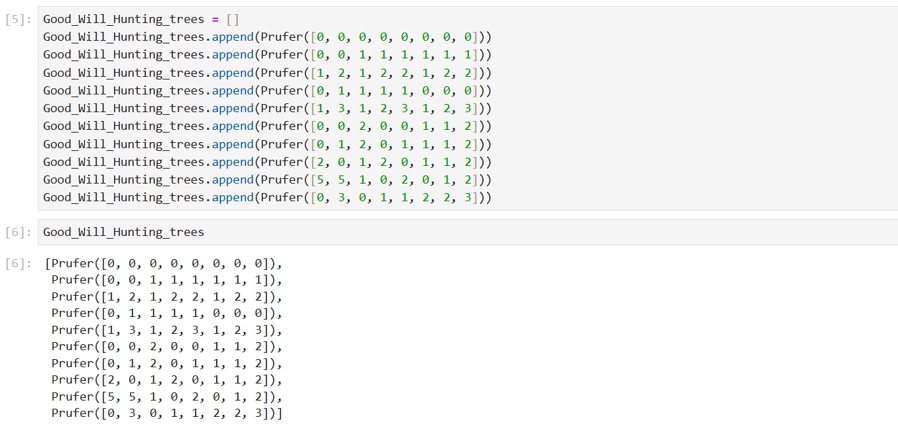

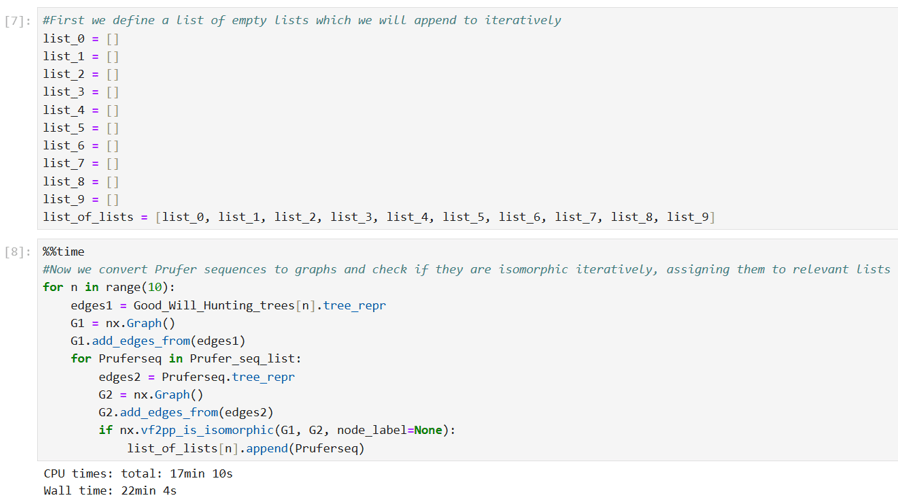

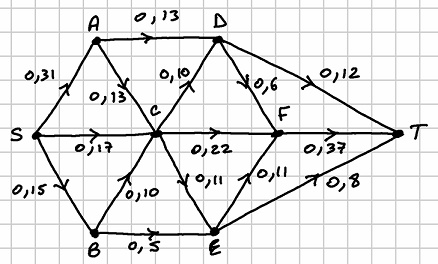

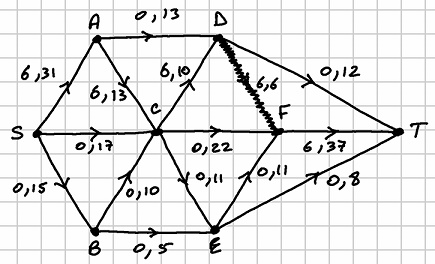

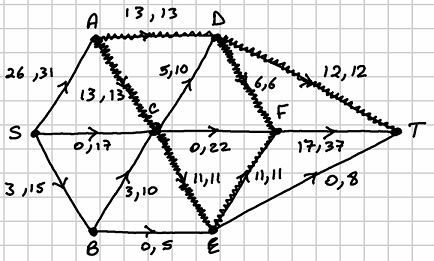

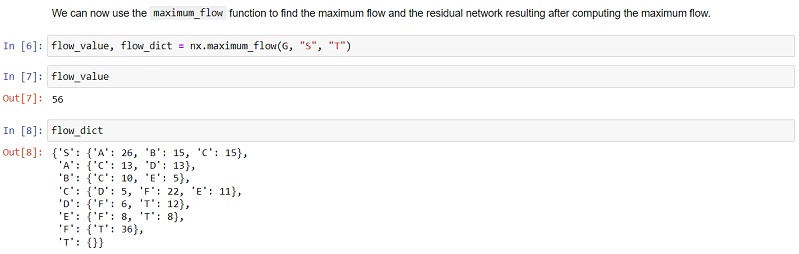

To supplement these results, I wanted to use Python to get time paths for ,

and

as direct numerical solutions to the coupled differential equations (3), (4) and (5), ideally also demonstrating the threshold phenomenon with these time paths. The following diagram summarises the deterministic general epidemic model to be implemented in Python:

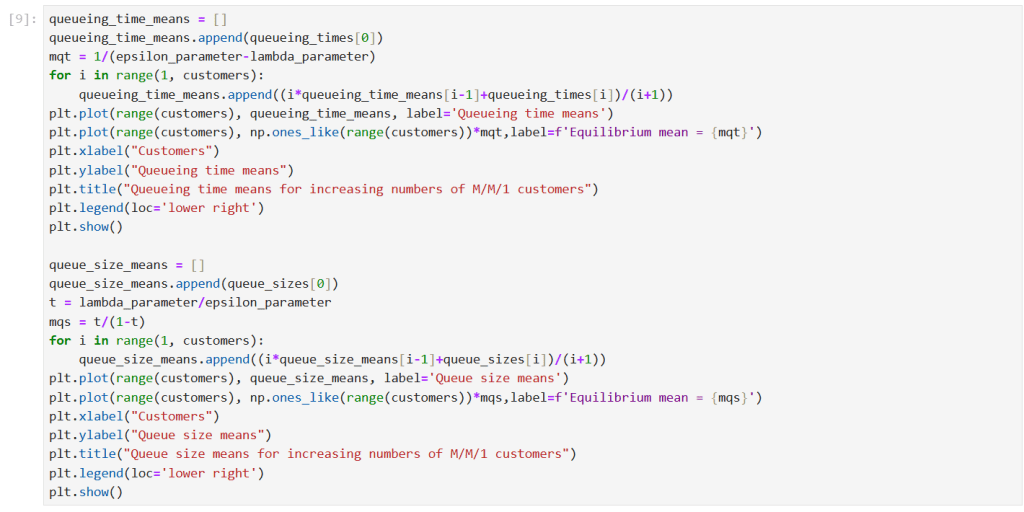

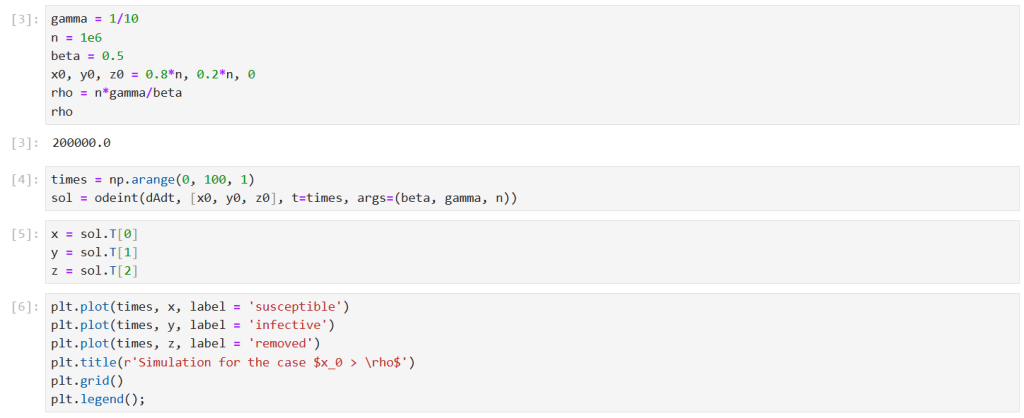

I used the odeint() function from the scipy.integrate library to solve the differential equation system numerically. This requires the definition of a Python function which takes as inputs ,

,

,

and

, and returns the vector derivative

.

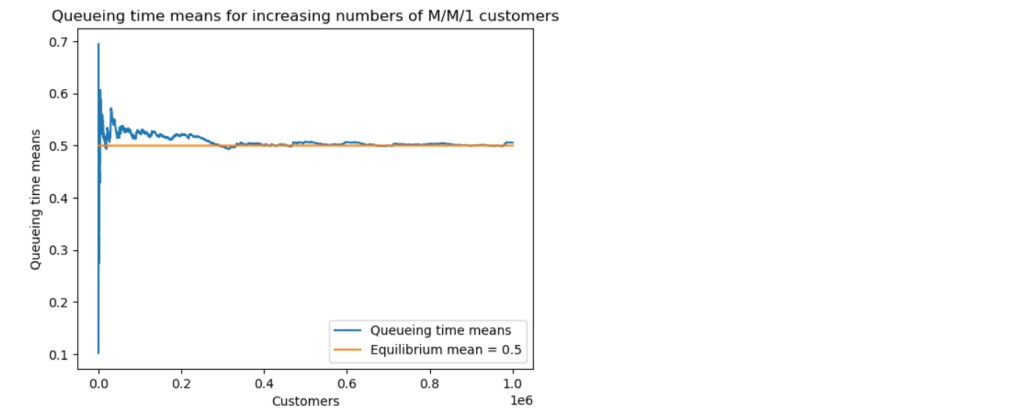

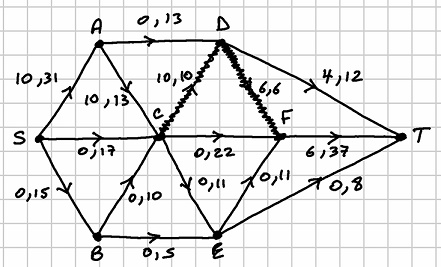

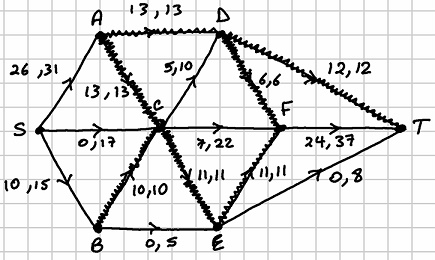

First, I ran a simulation for the case . I solved the model equations for

Note that the corresponding epidemic parameter is .

The results show the classic epidemic pattern for , with the number of infectives rising significantly above

before declining to

. Note that almost all the susceptibles ended up being infected in this scenario.

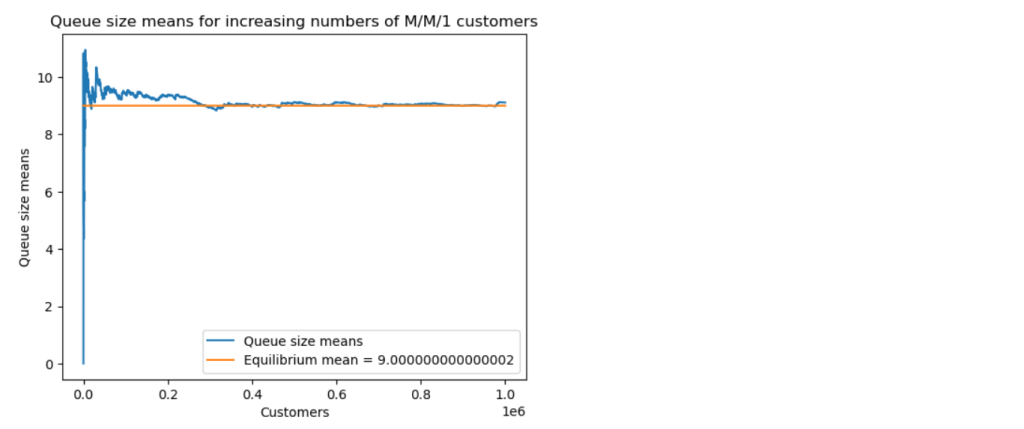

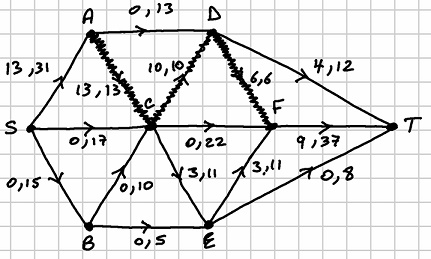

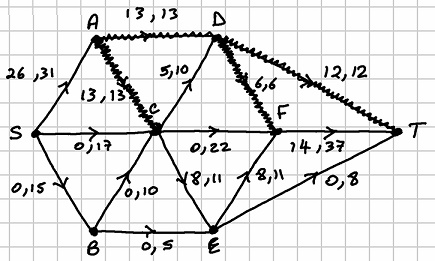

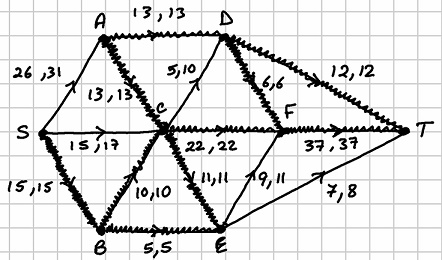

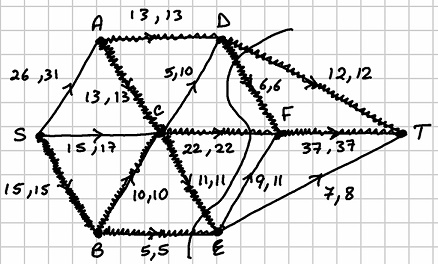

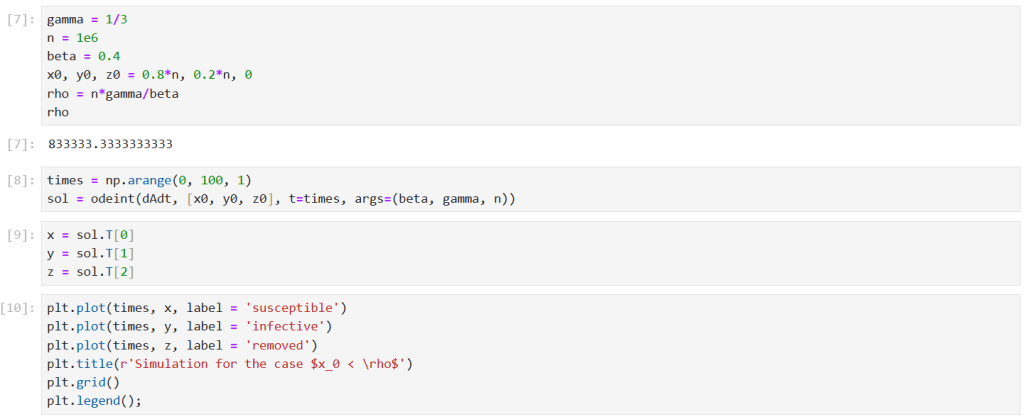

Next, I ran a simulation for the case . Suppose that public health measures have managed to increase the value of the epidemic parameter

by increasing the removal rate

and reducing the contact rate

. I solved the model equations for

Note that the corresponding epidemic parameter is now .

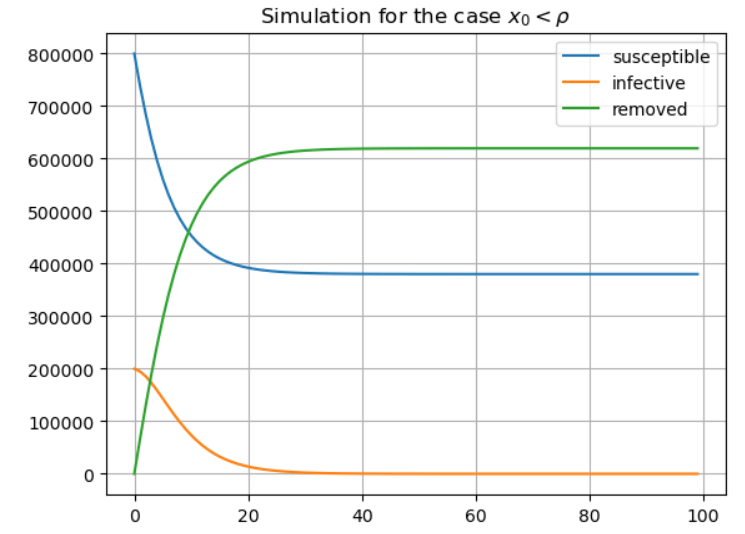

The results now show the typical results for , with the number of infectives never rising above the initial

, and declining steadily to

. Note that almost half of the initial number of susceptibles remained unaffected by the disease in this scenario.