In this post I want to record some notes on the mathematical nuances involved in a proof of Noether’s theorem, and on the relevance of the theorem to some simple conservation laws in classical physics, namely the conservation of energy and the conservation of linear momentum. Noether’s theorem has important applications in a wide range of classical mechanics problems as well as in quantum mechanics and Einstein’s relativity theory. It is also used in the study of certain classes of partial differential equations that can be derived from variational principles.

The theorem was first published by Emmy Noether in 1918. An interesting book by Yvette Kosmann-Schwarzbach presents an English translation of Noether’s 1918 paper and discusses in detail the history of the theorem’s development and its impact on theoretical physics in the 20th century (Kosmann-Schwarzbach, Y., 2011, The Noether Theorems: Invariance and Conservation Laws in the Twentieth Century, translated by Bertram Schwarzbach, Springer). At the time of writing, the book is freely downloadable online.

Mathematical setup of Noether’s theorem

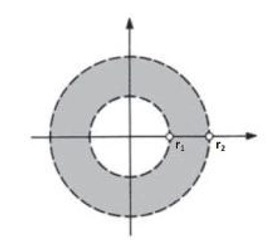

The case I explore in detail here is that of a variational calculus functional of the form

$$S[y] = \int_a^b dx \, F(x, y, y')$$

where $x$ is a single independent variable and $y = (y_1, y_2, \ldots, y_n)$ is a vector of $n$ dependent variables. The functional has stationary paths defined by the usual Euler-Lagrange equations of variational calculus. Noether’s theorem concerns how the value of this functional is affected by families of continuous transformations of the dependent and independent variables (e.g., translations, rotations) that are defined in terms of one or more real parameters. I restrict attention to transformations defined in terms of only a single parameter, call it $\delta$. The transformations can be represented in general terms as

$$\bar{x} = \Phi(x, y, y'; \delta), \qquad \bar{y}_k = \Psi_k(x, y, y'; \delta)$$

for $k = 1, 2, \ldots, n$. The functions $\Phi$ and $\Psi_k$ are assumed to have continuous first derivatives with respect to all the variables, including the parameter $\delta$. Furthermore, the transformations must reduce to identities when $\delta = 0$, i.e.,

$$x = \Phi(x, y, y'; 0), \qquad y_k = \Psi_k(x, y, y'; 0)$$

for $k = 1, 2, \ldots, n$. As concrete examples, translations and rotations are continuous differentiable transformations that can be defined in terms of a single parameter and that reduce to identities when the parameter takes the value zero.
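The two examples just mentioned can be sketched numerically. The following is only an illustrative sketch, not part of the theorem: the function names `translate`, `rotate` and `generator` are hypothetical, and the first-order coefficient of each family in its parameter is estimated by a central finite difference rather than computed exactly.

```python
import math

def translate(point, delta):
    """Shift the first coordinate by delta, leaving the second unchanged."""
    x, y = point
    return (x + delta, y)

def rotate(point, delta):
    """Rotate (y1, y2) anticlockwise through the angle delta about the origin."""
    y1, y2 = point
    return (y1 * math.cos(delta) - y2 * math.sin(delta),
            y1 * math.sin(delta) + y2 * math.cos(delta))

def generator(transform, point, eps=1e-6):
    """Central-difference estimate of d(transform)/d(delta) at delta = 0."""
    plus = transform(point, eps)
    minus = transform(point, -eps)
    return tuple((p - m) / (2 * eps) for p, m in zip(plus, minus))

point = (2.0, 3.0)

# Both families reduce to the identity when the parameter is zero.
assert translate(point, 0.0) == point
assert rotate(point, 0.0) == point

print(generator(translate, point))  # approximately (1, 0)
print(generator(rotate, point))     # approximately (-3, 2)
```

The derivative with respect to the parameter at zero is the only information about each family that enters a first-order (perturbative) treatment.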

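Noether’s theorem is assumed to apply to infinitesimally small changes in the dependent and independent variables, so we can assume $|\delta| \ll 1$ and then use perturbation theory to prove the theorem. Treating $\bar{x}$ and $\bar{y}_k$ as functions of $\delta$ and Taylor-expanding them about $\delta = 0$ we get

$$\bar{x} = x + \delta \, \phi + O(\delta^2)$$

where

$$\phi = \left. \frac{\partial \Phi}{\partial \delta} \right|_{\delta = 0}$$

and

$$\bar{y}_k = y_k + \delta \, \psi_k + O(\delta^2)$$

where

$$\psi_k = \left. \frac{\partial \Psi_k}{\partial \delta} \right|_{\delta = 0}$$

for $k = 1, 2, \ldots, n$.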

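Noether’s theorem then says that whenever the functional $S[y]$ is invariant under the above family of transformations, i.e., whenever

$$\int_{\bar{c}}^{\bar{d}} d\bar{x} \, F\left(\bar{x}, \bar{y}, \frac{d\bar{y}}{d\bar{x}}\right) = \int_c^d dx \, F\left(x, y, \frac{dy}{dx}\right)$$

for all $c$ and $d$ such that $a \le c < d \le b$, where $\bar{c} = \Phi(c, y(c), y'(c); \delta)$ and $\bar{d} = \Phi(d, y(d), y'(d); \delta)$, then for each stationary path of $S[y]$ the following equation holds:

$$\sum_{k=1}^n \frac{\partial F}{\partial y_k'} \psi_k + \left(F - \sum_{k=1}^n y_k' \frac{\partial F}{\partial y_k'}\right) \phi = \text{constant}$$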

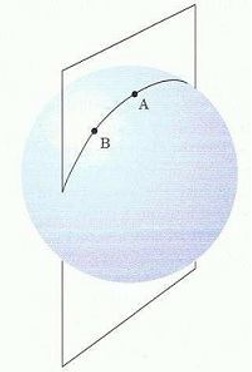

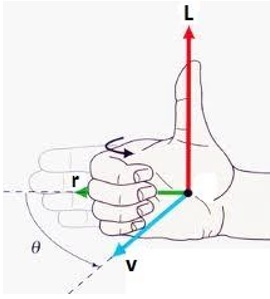

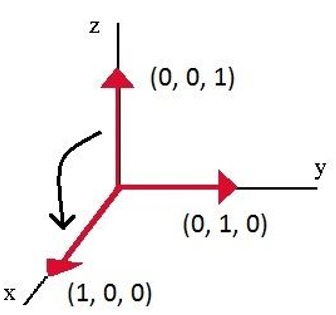

As illustrated below, this remarkable equation encodes a number of conservation laws in physics. These include the conservation of energy, of linear momentum and of angular momentum, which follow when the relevant equations of motion are invariant under translations in time, translations in space, and rotations in space, respectively. Thus, Noether’s theorem is often expressed as a statement along the lines that whenever a system has a continuous symmetry there must be corresponding quantities whose values are conserved.

Application of the theorem to familiar conservation laws in classical physics

It is, of course, not necessary to use the full machinery of Noether’s theorem for simple examples of conservation laws in classical physics. The theorem is most useful in unfamiliar situations in which it can reveal conserved quantities which were not previously known. However, going through the motions in simple cases clarifies how the mathematical machinery works in more sophisticated and less familiar situations.

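To obtain the law of the conservation of energy in the simplest possible scenario, consider a particle of mass $m$ moving along a straight line in a time-invariant potential field $V(x)$, with position at time $t$ given by the function $x(t)$. The Lagrangian formulation of mechanics then says that the path followed by the particle will be a stationary path of the action functional

$$S[x] = \int_{t_1}^{t_2} dt \, L(x, \dot{x}) = \int_{t_1}^{t_2} dt \left(\frac{1}{2} m \dot{x}^2 - V(x)\right)$$

The Euler-Lagrange equation for this functional gives Newton’s second law, $m \ddot{x} = -dV/dx$, as the equation governing the particle’s motion. With regard to demonstrating energy conservation, we notice that the Lagrangian, which is more generally of the form $L(t, x, \dot{x})$ when there is a time-varying potential, here takes the simpler form

$$L(x, \dot{x}) = \frac{1}{2} m \dot{x}^2 - V(x)$$

because there is no explicit dependence on time. Therefore we might expect the functional to be invariant under translations in time, and thus Noether’s theorem to apply. We will verify this. In the context of the mathematical setup of Noether’s theorem above, with $t$ playing the role of the independent variable and $x$ that of the single dependent variable, we can write the relevant transformations as

$$\bar{t} = \Phi(t, x, \dot{x}; \delta) = t + \delta$$

and

$$\bar{x} = \Psi(t, x, \dot{x}; \delta) = x$$

From the first equation we see that $\phi = \partial \Phi / \partial \delta |_{\delta = 0} = 1$ in the case of a simple translation in time by an amount $\delta$, and from the second equation we see that $\psi = \partial \Psi / \partial \delta |_{\delta = 0} = 0$, which simply reflects the fact that we are only translating in the time direction. The invariance of the functional under these transformations can easily be demonstrated by writing

$$\int_{t_1 + \delta}^{t_2 + \delta} d\bar{t} \, L\left(\bar{x}, \frac{d\bar{x}}{d\bar{t}}\right) = \int_{t_1}^{t_2} dt \, L\left(x, \frac{dx}{dt}\right)$$

where the limits in the second integral follow from the change of the time variable from $\bar{t}$ to $t = \bar{t} - \delta$. Thus, Noether’s theorem applies and with $\phi = 1$ and $\psi = 0$ the fundamental equation in the theorem reduces to

$$L - \dot{x} \frac{\partial L}{\partial \dot{x}} = \text{constant}$$

Evaluating the terms on the left-hand side we get $\frac{1}{2} m \dot{x}^2 - V(x) - m \dot{x}^2 = -\left(\frac{1}{2} m \dot{x}^2 + V(x)\right)$, so that

$$\frac{1}{2} m \dot{x}^2 + V(x) = \text{constant}$$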

which is of course the statement of the conservation of energy.
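As a rough numerical check of this result, the sketch below integrates Newton’s second law for one particular time-invariant potential and evaluates the Lagrangian minus velocity times the derivative of the Lagrangian with respect to velocity along the computed path. The harmonic potential with spring constant `k`, the parameter values, and the function names `simulate` and `noether_quantity` are all assumptions made for illustration only.

```python
def simulate(m=1.0, k=4.0, x0=1.0, v0=0.0, dt=1e-3, steps=5000):
    """Integrate m x'' = -dV/dx for V(x) = k x^2 / 2 with classical RK4."""
    def accel(x):
        return -k * x / m

    x, v = x0, v0
    path = [(x, v)]
    for _ in range(steps):
        k1x, k1v = v, accel(x)
        k2x, k2v = v + 0.5 * dt * k1v, accel(x + 0.5 * dt * k1x)
        k3x, k3v = v + 0.5 * dt * k2v, accel(x + 0.5 * dt * k2x)
        k4x, k4v = v + dt * k3v, accel(x + dt * k3x)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        path.append((x, v))
    return path

def noether_quantity(x, v, m=1.0, k=4.0):
    """L - v dL/dv for L = m v^2 / 2 - k x^2 / 2 (time translation)."""
    lagrangian = 0.5 * m * v**2 - 0.5 * k * x**2
    return lagrangian - v * (m * v)

path = simulate()
values = [noether_quantity(x, v) for x, v in path]
drift = max(values) - min(values)
print("initial value:", values[0])
print("spread along the path:", drift)
```

The printed value is minus the total energy, and its spread along the path should be at the level of the integrator’s numerical error, even though the kinetic and potential energies individually vary considerably.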

To obtain the law of conservation of linear momentum in the simplest possible scenario, assume now that the above particle is moving freely in the absence of any potential field, so $V(x) = 0$ and the only energy involved is kinetic energy. The path followed by the particle will now be a stationary path of the action functional

$$S[x] = \int_{t_1}^{t_2} dt \, L(\dot{x}) = \int_{t_1}^{t_2} dt \, \frac{1}{2} m \dot{x}^2$$

The Euler-Lagrange equation for this functional gives Newton’s first law, $m \ddot{x} = 0$, as the equation governing the particle’s motion (constant velocity in the absence of any forces). To get the law of conservation of linear momentum we will consider a translation in space rather than time, and check that the action functional is invariant under such translations. In the context of the mathematical setup of Noether’s theorem above, we can write the relevant transformations as

$$\bar{t} = \Phi(t, x, \dot{x}; \delta) = t$$

and

$$\bar{x} = \Psi(t, x, \dot{x}; \delta) = x + \delta$$

From the first equation we see that $\phi = \partial \Phi / \partial \delta |_{\delta = 0} = 0$, reflecting the fact that we are only translating in the space direction, and from the second equation we see that $\psi = \partial \Psi / \partial \delta |_{\delta = 0} = 1$ in the case of a simple translation in space by an amount $\delta$. The invariance of the functional under these transformations can easily be demonstrated by noting that $d\bar{x}/d\bar{t} = dx/dt$, so we can write

$$\int_{t_1}^{t_2} d\bar{t} \, \frac{1}{2} m \left(\frac{d\bar{x}}{d\bar{t}}\right)^2 = \int_{t_1}^{t_2} dt \, \frac{1}{2} m \left(\frac{dx}{dt}\right)^2$$

since the limits of integration are not affected by the translation in space. Thus, Noether’s theorem applies and with $\phi = 0$ and $\psi = 1$ the fundamental equation in the theorem reduces to

$$\frac{\partial L}{\partial \dot{x}} = m \dot{x} = \text{constant}$$

This is, of course, the statement of the conservation of linear momentum.
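The invariance of the free-particle action under translations in space can also be illustrated numerically. The sketch below is only an approximation under stated assumptions: the action integral is replaced by a simple Riemann sum over finite-difference velocities, and the trial path, the shift `delta` and the function name `discrete_action` are hypothetical choices. Note that the invariance holds for any path, not only for stationary ones, so a deliberately non-stationary trial path is used.

```python
def discrete_action(xs, dt, m=1.0):
    """Riemann-sum approximation of the integral of m v^2 / 2 over time."""
    total = 0.0
    for x_now, x_next in zip(xs, xs[1:]):
        velocity = (x_next - x_now) / dt  # finite-difference velocity
        total += 0.5 * m * velocity**2 * dt
    return total

dt = 0.01
# An arbitrary (non-stationary) trial path x(t) = t^2 sampled on [0, 1].
path = [(i * dt) ** 2 for i in range(101)]

# Translate every position by the same amount delta.
delta = 0.5
shifted = [x + delta for x in path]

difference = abs(discrete_action(path, dt) - discrete_action(shifted, dt))
print("change in the discretised action:", difference)
```

The difference is zero up to floating-point rounding, because the shift cancels in every velocity difference, which is the discrete counterpart of the observation that the derivative of the path is unchanged by the translation.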

Proof of Noether’s theorem

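To prove Noether’s theorem we will begin with the transformed functional

$$\bar{S}[\bar{y}] = \int_{\bar{c}}^{\bar{d}} d\bar{x} \, F\left(\bar{x}, \bar{y}, \frac{d\bar{y}}{d\bar{x}}\right)$$

We will substitute into this the linearised forms of the transformations, namely

$$\bar{x} = x + \delta \, \phi$$

and

$$\bar{y}_k = y_k + \delta \, \psi_k$$

for $k = 1, 2, \ldots, n$, and then expand to first order in $\delta$. Note that the integration limits are, to first order in $\delta$,

$$\bar{c} = c + \delta \, \phi(c)$$

and

$$\bar{d} = d + \delta \, \phi(d)$$

where $\phi(c)$ and $\phi(d)$ denote $\phi$ evaluated on the path at $x = c$ and $x = d$ respectively. Using the linearised forms of the transformations and writing $\bar{y}_k(\bar{x}) = y_k(\bar{x}) + \delta \, h_k(\bar{x})$ we get

$$y_k(\bar{x}) + \delta \, h_k(\bar{x}) = y_k(x) + \delta \, \psi_k, \qquad \bar{x} = x + \delta \, \phi$$

Inverting the second equation we get

$$x = \bar{x} - \delta \, \phi$$

Using this in the first equation, together with $y_k(\bar{x} - \delta \phi) = y_k(\bar{x}) - \delta \, \phi \, y_k'(\bar{x}) + O(\delta^2)$, we find, to first order in $\delta$,

$$h_k = \psi_k - \phi \, y_k'$$

Making the necessary substitutions, and relabelling the dummy integration variable $\bar{x}$ as $x$, we can then write the transformed functional as

$$\bar{S}[\bar{y}] = \int_{c + \delta \phi(c)}^{d + \delta \phi(d)} dx \, F(x, y + \delta h, y' + \delta h')$$

Treating $F$ as a function of $\delta$ and expanding about $\delta = 0$ to first order we get

$$F(x, y + \delta h, y' + \delta h') = F(x, y, y') + \delta \sum_{k=1}^n \left(\frac{\partial F}{\partial y_k} h_k + \frac{\partial F}{\partial y_k'} h_k'\right) + O(\delta^2)$$

Then using the expression for $h_k$ above, the transformed functional becomes

$$\bar{S}[\bar{y}] = \int_{c + \delta \phi(c)}^{d + \delta \phi(d)} dx \left\{ F + \delta \sum_{k=1}^n \left[\frac{\partial F}{\partial y_k} (\psi_k - \phi \, y_k') + \frac{\partial F}{\partial y_k'} (\psi_k' - \phi' y_k' - \phi \, y_k'')\right] \right\} + O(\delta^2)$$

To first order in $\delta$, shifting the limits of integration contributes the boundary term $\delta \, [\phi F]_c^d$ from the integral of $F$, and affects the integral that is already multiplied by $\delta$ only at second order. Ignoring the second order term in $\delta$ we can thus write

$$\bar{S}[\bar{y}] = \int_c^d dx \, F + \delta \, [\phi F]_c^d + \delta \int_c^d dx \sum_{k=1}^n \left[\frac{\partial F}{\partial y_k} (\psi_k - \phi \, y_k') + \frac{\partial F}{\partial y_k'} (\psi_k' - \phi' y_k' - \phi \, y_k'')\right]$$

Since the functional is invariant, however, this implies

$$[\phi F]_c^d + \int_c^d dx \sum_{k=1}^n \left[\frac{\partial F}{\partial y_k} (\psi_k - \phi \, y_k') + \frac{\partial F}{\partial y_k'} (\psi_k' - \phi' y_k' - \phi \, y_k'')\right] = 0$$

We now manipulate this equation by integrating the terms involving $\psi_k'$ and $\phi'$ by parts. We get

$$\int_c^d dx \, \frac{\partial F}{\partial y_k'} \psi_k' = \left[\psi_k \frac{\partial F}{\partial y_k'}\right]_c^d - \int_c^d dx \, \psi_k \frac{d}{dx}\left(\frac{\partial F}{\partial y_k'}\right)$$

and

$$\int_c^d dx \, \phi' y_k' \frac{\partial F}{\partial y_k'} = \left[\phi \, y_k' \frac{\partial F}{\partial y_k'}\right]_c^d - \int_c^d dx \, \phi \frac{d}{dx}\left(y_k' \frac{\partial F}{\partial y_k'}\right)$$

Substituting these into the equation gives

$$\left[\sum_{k=1}^n \frac{\partial F}{\partial y_k'} \psi_k + \left(F - \sum_{k=1}^n y_k' \frac{\partial F}{\partial y_k'}\right) \phi\right]_c^d + \int_c^d dx \, \phi \sum_{k=1}^n \left\{\frac{d}{dx}\left(y_k' \frac{\partial F}{\partial y_k'}\right) - y_k' \frac{\partial F}{\partial y_k} - y_k'' \frac{\partial F}{\partial y_k'}\right\} + \int_c^d dx \sum_{k=1}^n \psi_k \left(\frac{\partial F}{\partial y_k} - \frac{d}{dx} \frac{\partial F}{\partial y_k'}\right) = 0$$

We can manipulate this equation further by expanding the integrand in the second term on the left-hand side. We get

$$\frac{d}{dx}\left(y_k' \frac{\partial F}{\partial y_k'}\right) - y_k' \frac{\partial F}{\partial y_k} - y_k'' \frac{\partial F}{\partial y_k'} = -y_k' \left(\frac{\partial F}{\partial y_k} - \frac{d}{dx} \frac{\partial F}{\partial y_k'}\right)$$

Thus, the equation becomes

$$\left[\sum_{k=1}^n \frac{\partial F}{\partial y_k'} \psi_k + \left(F - \sum_{k=1}^n y_k' \frac{\partial F}{\partial y_k'}\right) \phi\right]_c^d - \int_c^d dx \, \phi \sum_{k=1}^n y_k' \left(\frac{\partial F}{\partial y_k} - \frac{d}{dx} \frac{\partial F}{\partial y_k'}\right) + \int_c^d dx \sum_{k=1}^n \psi_k \left(\frac{\partial F}{\partial y_k} - \frac{d}{dx} \frac{\partial F}{\partial y_k'}\right) = 0$$

We can now see at a glance that the second and third terms on the left-hand side must vanish because of the Euler-Lagrange expressions appearing in the brackets (which are identically zero on stationary paths). Thus we arrive at the equation

$$\left[\sum_{k=1}^n \frac{\partial F}{\partial y_k'} \psi_k + \left(F - \sum_{k=1}^n y_k' \frac{\partial F}{\partial y_k'}\right) \phi\right]_c^d = 0$$

which, since $c$ and $d$ are arbitrary points of the interval of integration, proves that the formula inside the square brackets is constant along each stationary path, as per Noether’s theorem.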
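As a sanity check of the final formula in a case with more than one dependent variable, the sketch below evaluates it along a numerically computed stationary path for a rotation in the plane of two dependent variables. Everything specific here is an assumption made for illustration: the isotropic harmonic potential, the parameter values, the RK4 integrator, the function names, and the rotation’s first-order coefficients, taken to be zero for the independent variable and `(-y2, y1)` for the dependent variables.

```python
def noether_quantity(F, dF_dyp, yp, phi, psi):
    """sum_k dF/dy_k' * psi_k + (F - sum_k y_k' * dF/dy_k') * phi."""
    momentum_term = sum(p * s for p, s in zip(dF_dyp, psi))
    energy_term = F - sum(v * p for v, p in zip(yp, dF_dyp))
    return momentum_term + energy_term * phi

def rk4_step(state, dt, m, k):
    """One RK4 step for m y'' = -k y in two dimensions; state = (y1, y2, v1, v2)."""
    def deriv(s):
        y1, y2, v1, v2 = s
        return (v1, v2, -k * y1 / m, -k * y2 / m)

    def shift(s, d, h):
        return tuple(a + h * b for a, b in zip(s, d))

    k1 = deriv(state)
    k2 = deriv(shift(state, k1, 0.5 * dt))
    k3 = deriv(shift(state, k2, 0.5 * dt))
    k4 = deriv(shift(state, k3, dt))
    return tuple(s + dt * (a + 2 * b + 2 * c + d) / 6
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

m, k, dt = 1.0, 1.0, 1e-3
state = (1.0, 0.0, 0.0, 0.5)  # initial (y1, y2, v1, v2), chosen arbitrarily
values = []
for _ in range(5000):
    y1, y2, v1, v2 = state
    # Integrand F = kinetic energy minus the assumed isotropic potential.
    F = 0.5 * m * (v1**2 + v2**2) - 0.5 * k * (y1**2 + y2**2)
    values.append(noether_quantity(F, (m * v1, m * v2), (v1, v2),
                                   0.0, (-y2, y1)))
    state = rk4_step(state, dt, m, k)

spread = max(values) - min(values)
print("initial value:", values[0])
print("spread along the path:", spread)
```

With these choices the formula reduces to the angular momentum about the origin, and its spread along the path should again be at the level of the integrator’s numerical error.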