In this note I want to explore some of the details involved in the close analogy between:

A). the way Cantor constructed the real number field as the completion of the rationals using Cauchy sequences with the usual Euclidean metric; and

B). the way the p-adic number field can be similarly constructed as the completion of the rationals, but using Cauchy sequences with a different metric (known as an ultrametric).

I have found that exploring this analogy in some detail has allowed me to get quite a good foothold on some of the key features of p-adic analysis.

- A basic initial characterisation of p-adic numbers

A lot flows from the basic observation that, given a prime number $p$ and a non-zero rational number $x$, it is always possible to factor out the powers of $p$ in $x$ as in the equation

$$x = p^{n_0} \frac{a}{b}$$

with $p \nmid ab$.

The exponent $n_0$, known as the p-adic valuation in the literature, can be negative, zero or positive depending on how the prime $p$ appears (or not) as a factor in the numerator and denominator of the rational number $x$.

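For example, once a particular prime $p$ is specified, the powers of $p$ can be factored out of any given non-zero rationals in this way: a rational with exactly $p^2$ in its numerator has valuation $2$, one with no factor of $p$ in either its numerator or its denominator has valuation $0$, and one with exactly $p^2$ in its denominator has valuation $-2$.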
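The factorisation described above is easy to experiment with. The following is a minimal Python sketch, not a definitive implementation: the function names `padic_valuation` and `factor_out` are hypothetical, and the prime $5$ and the sample rationals are illustrative choices rather than values taken from the text.

```python
from fractions import Fraction

def padic_valuation(x: Fraction, p: int) -> int:
    """Return the exponent n0 such that x = p**n0 * (a/b) with p dividing neither a nor b."""
    if x == 0:
        raise ValueError("the valuation of 0 is not a finite integer")
    num, den = x.numerator, x.denominator
    n0 = 0
    while num % p == 0:      # each factor of p in the numerator raises n0
        num //= p
        n0 += 1
    while den % p == 0:      # each factor of p in the denominator lowers n0
        den //= p
        n0 -= 1
    return n0

def factor_out(x: Fraction, p: int):
    """Return (n0, a/b) with x == p**n0 * (a/b) and p dividing neither a nor b."""
    n0 = padic_valuation(x, p)
    return n0, x / Fraction(p) ** n0

# Illustrative values with p = 5 (assumed for this sketch):
print(factor_out(Fraction(75), 5))      # (2, Fraction(3, 1))
print(factor_out(Fraction(2, 3), 5))    # (0, Fraction(2, 3))
print(factor_out(Fraction(7, 50), 5))   # (-2, Fraction(7, 2))
```

Using `Fraction` keeps every quotient in lowest terms automatically, which is why the two loops can strip factors of $p$ from numerator and denominator independently.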

For each prime number $p$, we can write any positive rational number $x$ in the power series form

$$x = \sum_{i=n_0}^{\infty} a_i p^i = a_{n_0} p^{n_0} + a_{n_0+1} p^{n_0+1} + a_{n_0+2} p^{n_0+2} + \cdots$$

where $n_0$ is the p-adic valuation of $x$ and the coefficients $a_i$ come from the set $\{0, 1, \ldots, p-1\}$ of least non-negative residues modulo $p$. (These coefficients will always exhibit a repeating pattern in the power series of a rational number). This power series form is called the p-adic expansion of $x$. In the case $x \in \mathbb{N}$, i.e., when $x$ is a positive integer, the p-adic expansion is just the (finite) expansion of $x$ in base $p$.

If the rational number $x$ is negative rather than positive, its p-adic expansion can be obtained from the expansion $-x = \sum_{i \geq n_0} a_i p^i$ of the positive rational $-x$ as

$$x = \sum_{i=n_0}^{\infty} b_i p^i$$

where

$$b_{n_0} = p - a_{n_0}$$

and

$$b_i = (p - 1) - a_i$$

for $i > n_0$.

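We can obtain the p-adic expansion for any rational number $x$ by the following algorithm. Let the p-adic expansion we want to find be

$$x = p^{n_0} \frac{a}{b} = a_{n_0} p^{n_0} + a_{n_0+1} p^{n_0+1} + a_{n_0+2} p^{n_0+2} + \cdots$$

where all fractions are always given in their lowest terms. We deduce that the p-adic expansion of $\frac{a}{b}$ is then

$$\frac{a}{b} = a_{n_0} + a_{n_0+1} p + a_{n_0+2} p^2 + \cdots$$

so, since the right hand side equals $a_{n_0}$ mod $p$, we can compute $a_{n_0}$ as

$$a_{n_0} \equiv a b^{-1} \pmod{p}$$

Next, we see that

$$\frac{1}{p}\left(\frac{a}{b} - a_{n_0}\right) = \frac{a'}{b'}$$

for some fraction $\frac{a'}{b'}$ in lowest terms with $p \nmid b'$. We deduce that the p-adic expansion of $\frac{a'}{b'}$ is then

$$\frac{a'}{b'} = a_{n_0+1} + a_{n_0+2} p + a_{n_0+3} p^2 + \cdots$$

so, since the right hand side equals $a_{n_0+1}$ mod $p$, we can compute $a_{n_0+1}$ as

$$a_{n_0+1} \equiv a' (b')^{-1} \pmod{p}$$

We continue this process until the repeating pattern in the coefficients is spotted.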
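The steps above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name `padic_digits` is hypothetical, `fractions.Fraction` is used so that every fraction stays in lowest terms, and the three-argument `pow` with exponent `-1` (available from Python 3.8) supplies the inverse of the denominator modulo $p$. The sample inputs are illustrative choices, not values taken from the text.

```python
from fractions import Fraction

def padic_digits(x: Fraction, p: int, count: int):
    """Return (n0, digits): the valuation of x and the first `count`
    coefficients a_{n0}, a_{n0+1}, ... of its p-adic expansion."""
    x = Fraction(x)
    if x == 0:
        return 0, [0] * count
    # Factor out the powers of p, leaving a fraction a/b with p dividing neither a nor b.
    n0 = 0
    while x.numerator % p == 0:
        x /= p
        n0 += 1
    while x.denominator % p == 0:
        x *= p
        n0 -= 1
    digits = []
    for _ in range(count):
        # Next coefficient: a * b^{-1} reduced modulo p, taken in {0, ..., p-1}.
        d = (x.numerator * pow(x.denominator, -1, p)) % p
        digits.append(d)
        # Subtract the coefficient and divide by p to expose the next one.
        x = (x - d) / p
    return n0, digits

print(padic_digits(Fraction(1, 3), 5, 6))   # (0, [2, 3, 1, 3, 1, 3])
print(padic_digits(Fraction(7), 2, 4))      # (0, [1, 1, 1, 0])
print(padic_digits(Fraction(-1), 5, 4))     # (0, [4, 4, 4, 4])
```

In the first call the fraction left after the third step equals the one left after the first step, so the coefficients $3, 1$ repeat from then on. The second call reproduces the base-2 expansion of $7$ (lowest digit first), and the third shows a negative rational acquiring an infinite expansion.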

For example, suppose we specify a prime $p$ and consider a rational number $x$ with p-adic valuation $n_0$, so that we can write

$$x = p^{n_0} \frac{a}{b}$$

Therefore we expect the p-adic expansion for $x$ to have $p^{n_0}$ in its first term. Following the steps of the algorithm we compute the first coefficient as

$$a_{n_0} \equiv a b^{-1} \pmod{p}$$

Subtracting this coefficient from $\frac{a}{b}$ and dividing by $p$ gives a new fraction, from which the second coefficient is computed in the same way, and likewise for the third. If the fraction left after the third step is the same as the one left after the first, we see immediately that the fourth coefficient will be the same as the second, and the pattern will repeat from this point onwards, which gives the complete p-adic expansion of $x$.

It can be shown that the set of all p-adic expansions is an algebraic field. This is called the field of p-adic numbers and is usually denoted by $\mathbb{Q}_p$ in the literature. In the rest of this note I will explore some aspects of the construction of the field $\mathbb{Q}_p$ by analogy with the way Cantor constructed the field of real numbers from the field of rationals. The next section reviews Cantor’s construction of the reals.

- Cantor’s construction of the real number field

In Cantor’s construction of the real numbers from the rationals, we regard the latter as a metric space $(\mathbb{Q}, d)$ where the metric $d$ is defined in terms of the ordinary Euclidean absolute value function:

$$d(x, y) = |x - y|$$

The central problem in constructing the real number field $\mathbb{R}$ from the field of rationals $\mathbb{Q}$ is that of defining irrational numbers only in terms of rationals. This can be done in alternative ways, e.g., using Dedekind cuts, but Cantor’s approach achieves it using the concept of a Cauchy sequence. A Cauchy sequence in a metric space is a sequence of points which become arbitrarily ‘close’ to each other with respect to the metric, as we move further and further out in the sequence. More formally, in the context of the metric space $(\mathbb{Q}, d)$, a sequence $\{x_n\}$ of rationals is a Cauchy sequence if for each $\epsilon > 0$ (where $\epsilon \in \mathbb{Q}$) there is an $N \in \mathbb{N}$ such that

$$|x_m - x_n| < \epsilon$$

for all $m, n \geq N$.

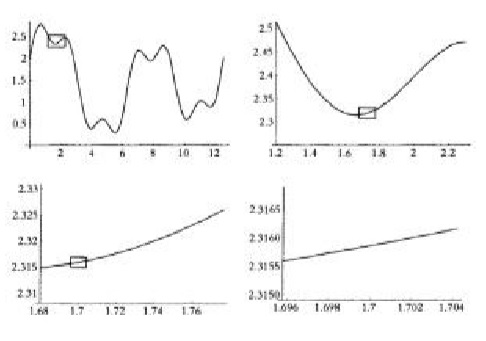

For example, if $\epsilon = 10^{-6}$, then we can be sure that there is a certain point in the Cauchy sequence $\{x_n\}$ beyond which all the terms of the sequence will always be within a millionth of each other in absolute value. If instead we set $\epsilon = 10^{-9}$, we might have to go further out in the sequence, but we can still be sure that beyond a certain point all the terms of the sequence from then on will be within a billionth of each other in absolute value. And so on.

Cantor’s approach to constructing the reals is based on the idea that any irrational number can be regarded as the limit of Cauchy sequences of rationals, so we can actually define the irrationals as sets of Cauchy sequences of rationals.

To illustrate, consider an irrational number $\alpha$ and fix rationals $a_0 < \alpha < b_0$. Define three sequences of rationals $\{a_n\}$, $\{b_n\}$, $\{x_n\}$ as follows:

$x_n = \frac{a_n + b_n}{2}$ for all $n$,

$a_{n+1} = x_n$ if $x_n < \alpha$, otherwise $a_{n+1} = a_n$,

$b_{n+1} = x_n$ if $x_n > \alpha$, otherwise $b_{n+1} = b_n$.

(For $\alpha = \sqrt{2}$, say, and positive $x_n$, the test $x_n < \alpha$ can be carried out entirely within the rationals as $x_n^2 < 2$.)

For each $n$, $x_n$ lies between $a_n$ and $b_n$, and at each iteration the closed interval $[a_n, b_n]$ has length

$$b_n - a_n = \frac{b_0 - a_0}{2^n}$$

(To see this, note that from the definitions of the three sequences above we find that

$$b_{n+1} - a_{n+1} = \frac{b_n - a_n}{2}$$

so we get the result from this by a simple induction). Also, each closed interval $[a_n, b_n]$ contains $\alpha$. Therefore the closed interval $[a_n, b_n]$ is increasingly ‘closing in’ around $\alpha$, i.e., we have

$$[a_{n+1}, b_{n+1}] \subseteq [a_n, b_n]$$

So for each of the sequences $\{a_n\}$, $\{b_n\}$, $\{x_n\}$ of rationals, the terms of the sequence are getting closer and closer to each other, and closer to $\alpha$. Cantor’s idea was basically to define an irrational such as $\alpha$ to be the set containing all Cauchy sequences like $\{a_n\}$, $\{b_n\}$, and $\{x_n\}$ which converge to that irrational.
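The bisection idea described above can be tried out numerically. The following is a minimal Python sketch under stated assumptions: the irrational is taken to be $\sqrt{2}$ and the starting interval to be $[1, 2]$ (both illustrative choices), the function name `bisection_sequences` is hypothetical, and the comparison with $\sqrt{2}$ is done purely in the rationals by testing whether the square of the midpoint is less than $2$.

```python
from fractions import Fraction

def bisection_sequences(steps: int, a0=Fraction(1), b0=Fraction(2)):
    """Return a list of triples (a_n, b_n, x_n) of exact rationals
    whose intervals [a_n, b_n] close in on sqrt(2)."""
    a, b = a0, b0
    rows = []
    for _ in range(steps):
        x = (a + b) / 2          # midpoint of the current interval
        rows.append((a, b, x))
        if x * x < 2:            # midpoint lies below sqrt(2): raise the lower end
            a = x
        else:                    # midpoint lies above sqrt(2): lower the upper end
            b = x
    return rows

for n, (a, b, x) in enumerate(bisection_sequences(6)):
    print(n, a, b, x, b - a)
```

Each printed row shows the interval length halving, so all three sequences are Cauchy in the Euclidean metric even though no term is ever equal to $\sqrt{2}$.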

Formally, the process involves defining equivalence classes of Cauchy sequences in the metric space $(\mathbb{Q}, d)$, so that two Cauchy sequences $\{x_n\}$ and $\{y_n\}$ belong to the same equivalence class, denoted $\{x_n\} \sim \{y_n\}$, if for each $\epsilon > 0$ (where $\epsilon \in \mathbb{Q}$) there is an $N \in \mathbb{N}$ such that

$$|x_n - y_n| < \epsilon$$

for all $n \geq N$.

It is straightforward to show that $\sim$ is an equivalence relation in the sense that it is reflexive (i.e., $\{x_n\} \sim \{x_n\}$ for all Cauchy sequences $\{x_n\}$), symmetric (i.e., if $\{x_n\} \sim \{y_n\}$ then $\{y_n\} \sim \{x_n\}$ for all Cauchy sequences $\{x_n\}$ and $\{y_n\}$), and transitive (i.e., if $\{x_n\} \sim \{y_n\}$ and $\{y_n\} \sim \{z_n\}$ then $\{x_n\} \sim \{z_n\}$ for all Cauchy sequences $\{x_n\}$, $\{y_n\}$ and $\{z_n\}$).

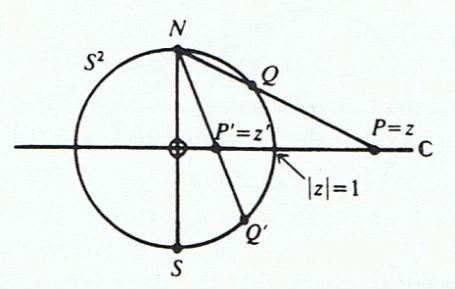

Cantor defined a real number to be any equivalence class arising from $\sim$, i.e., any set of the form

$$[\{x_n\}] = \{\, \{y_n\} : \{y_n\} \sim \{x_n\} \,\}$$

where $\{x_n\}$ is a Cauchy sequence in the metric space $(\mathbb{Q}, d)$. Rational numbers are, of course, subsumed in this since any rational number $q$ is represented by the equivalence class of the (constant) Cauchy sequence $\{x_n\}$ defined by $x_n = q$ for all $n$.

It is now possible to define all the standard relations and arithmetic operations on the real numbers constructed in this way, and it can also be shown that the set of reals constructed in this way is isomorphic to the set of reals defined by alternative means, such as Dedekind cuts.

The set of reals constructed in this way can be regarded as the completion of the set of rationals in the sense that it is obtained by adding to the set of rationals all the limits of all possible Cauchy sequences in $(\mathbb{Q}, d)$ which are irrational. In general, a metric space is said to be complete if every Cauchy sequence in that metric space converges to a point within that metric space. Clearly, therefore, the metric space $(\mathbb{Q}, d)$ is not complete since, for example, we found Cauchy sequences of rationals above which converge to an irrational number. However, it is a basic result of elementary real analysis that the metric space $(\mathbb{R}, d)$ is complete. It is also a basic result that the completion of a field gives another field, so since $\mathbb{Q}$ is a field it must also be the case that $\mathbb{R}$ is a field.

- Archimedian vs. non-archimedian absolute values and ultrametric spaces

In constructing the p-adic number field it becomes important to distinguish between two types of absolute value function on a field, namely archimedian and non-archimedian absolute values. All absolute values on a field by definition have the properties that they assign the value 0 only to the field element 0, they assign the positive value $|x|$ to each non-zero field element $x$, and they satisfy $|xy| = |x||y|$ and the usual triangle inequality

$$|x + y| \leq |x| + |y|$$

The usual Euclidean absolute value function used above on $\mathbb{Q}$, of course, satisfies these conditions, and is called archimedian because it has the property that there is no limit to the size of the absolute values that can be assigned to integers. We can write this as

$$\sup\{|n| : n \in \mathbb{Z}\} = \infty$$

Non-archimedian absolute values do not have this property. In addition to the basic conditions that all absolute values must satisfy, non-archimedian absolute values must also satisfy the additional condition

$$|x + y| \leq \max\{|x|, |y|\}$$

which is known as the ultrametric triangle inequality. It is obviously the case that the usual Euclidean absolute value function used above on $\mathbb{Q}$ (an archimedian absolute value function) does not satisfy this ultrametric triangle inequality condition, e.g.,

$$|1 + 1| = 2 > 1 = \max\{|1|, |1|\}$$

In fact, it follows from the ultrametric triangle inequality condition that non-archimedian absolute values of integers can never exceed $1$, because if the condition is to hold for the absolute value function then we can write for any integer $n$:

$$|n + 1| \leq \max\{|n|, |1|\} = \max\{|n|, 1\}$$

and so by induction we must have

$$|n| \leq 1 \quad \text{for all } n \in \mathbb{N}$$

Then if $n < 0$ we would have $-n > 0$ which implies $|-n| \leq 1$, and so $|n| = |-n| \leq 1$ in this case. It is not possible for $|n|$ to exceed $1$, so in the case of non-archimedian absolute values we have

$$\sup\{|n| : n \in \mathbb{Z}\} = 1$$

Any absolute value function which does not satisfy the above ultrametric triangle inequality condition is called archimedian, and these are the only two possible types of absolute values. To see that these are the only two possible types, suppose we have an absolute value function such that

$$\sup\{|n| : n \in \mathbb{Z}\} = C$$

where $C > 1$. Then there must exist an integer $m$ whose absolute value exceeds $1$, and so $|m^k| = |m|^k$ gets arbitrarily large as $k$ grows, so $C$ cannot be finite. The absolute value function must be archimedian in this case. Otherwise, we must have $C \leq 1$, but since for all absolute values it must be the case that $|1| = 1$, it must be the case that $C = 1$ if $C$ is finite. Thus we must have a non-archimedian absolute value in this case and there are no other possibilities.

The trick in constructing the p-adic number field from the rationals is to use a certain non-archimedian absolute value function satisfying the ultrametric triangle inequality condition to define the metric over $\mathbb{Q}$, rather than the usual (archimedian) Euclidean absolute value function. In this regard we have the following:

Theorem 1. Define a metric on a field by $d(x, y) = |x - y|$. Then the absolute value function in this definition is non-archimedian if and only if for all field elements $x$, $y$, $z$ we have

$$d(x, y) \leq \max\{d(x, z), d(z, y)\}$$

Proof: Suppose first that the absolute value function is non-archimedian. Applying it to the equation

$$x - y = (x - z) + (z - y)$$

gives

$$|x - y| \leq \max\{|x - z|, |z - y|\}$$

which is the stated inequality. Conversely, suppose the given metric inequality holds. Then replacing $y$ by $-y$ and setting $z = 0$ in the metric inequality we get

$$d(x, -y) \leq \max\{d(x, 0), d(0, -y)\}$$

which is equivalent to

$$|x + y| \leq \max\{|x|, |y|\}$$

thus proving that the absolute value function is non-archimedian.

A metric for which the inequality in Theorem 1 is true is called an ultrametric, and a space endowed with an ultrametric is called an ultrametric space. Such spaces have curious properties which have been studied extensively. In some ways, however, using a non-archimedian absolute value makes analysis much easier than in the usual archimedian case. In this regard we have the following result pertaining to Cauchy sequences with respect to a non-archimedian absolute value function, which is NOT true for archimedian absolute values:

Theorem 2. A sequence $\{x_n\}$ of rational numbers is a Cauchy sequence with respect to a non-archimedian absolute value if and only if we have

$$\lim_{n \to \infty} |x_{n+1} - x_n| = 0$$

Proof: Letting $m = n + r > n$, we have

$$|x_m - x_n| = |(x_{n+r} - x_{n+r-1}) + (x_{n+r-1} - x_{n+r-2}) + \cdots + (x_{n+1} - x_n)| \leq \max\{|x_{n+r} - x_{n+r-1}|, \ldots, |x_{n+1} - x_n|\}$$

because the absolute value is non-archimedian. We then have that if $\{x_n\}$ is Cauchy then the terms get arbitrarily closer as $n \to \infty$ so we must have $\lim_{n \to \infty} |x_{n+1} - x_n| = 0$. Conversely, if $\lim_{n \to \infty} |x_{n+1} - x_n| = 0$ is true, then by the inequality above we must also have $\lim_{n \to \infty} |x_m - x_n| = 0$ for any $m > n$, so the conditions of a Cauchy sequence are satisfied.

It is important to note that Theorem 2 is false for archimedian absolute values. The classic counterexample involves the partial sums of the harmonic series (which is divergent in terms of Euclidean absolute values). Consider the following three partial sums in particular:

$$x_n = \sum_{k=1}^{n} \frac{1}{k}, \qquad x_{n+1} = \sum_{k=1}^{n+1} \frac{1}{k}, \qquad x_{2n} = \sum_{k=1}^{2n} \frac{1}{k}$$

Then we have

$$|x_{n+1} - x_n| = \frac{1}{n+1} \to 0 \quad \text{as } n \to \infty$$

so the condition of Theorem 2 is satisfied. However,

$$|x_{2n} - x_n| = \frac{1}{n+1} + \frac{1}{n+2} + \cdots + \frac{1}{2n} \geq n \cdot \frac{1}{2n} = \frac{1}{2}$$

Therefore it is not true in this case that $\lim_{n \to \infty} |x_m - x_n| = 0$ for any $m > n$, so the conditions of a Cauchy sequence are not satisfied here. It is only in the context of non-archimedian absolute values that this works.
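The harmonic-series counterexample can be checked with exact rational arithmetic. This is a small illustrative sketch, not part of the original argument; the function name `harmonic` is hypothetical and the values of $n$ are arbitrary choices.

```python
from fractions import Fraction

def harmonic(n: int) -> Fraction:
    """Return the n-th partial sum 1 + 1/2 + ... + 1/n as an exact fraction."""
    return sum(Fraction(1, k) for k in range(1, n + 1))

for n in (1, 10, 100, 1000):
    consecutive_gap = harmonic(n + 1) - harmonic(n)   # equals 1/(n+1), shrinks to 0
    doubling_gap = harmonic(2 * n) - harmonic(n)      # never drops below 1/2
    print(n, float(consecutive_gap), float(doubling_gap))
```

The consecutive differences shrink towards zero while the gap between the $n$-th and $2n$-th partial sums stays at or above one half, which is exactly why the sequence fails to be Cauchy for the Euclidean absolute value.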

- Constructing the p-adic number field as the completion of the rationals

To obtain the p-adic number field as the completion of the field of rationals in a way analogous to how Cantor obtained the reals from the rationals, we use an ultrametric based on a non-archimedian absolute value known as the p-adic absolute value.

For each prime $p$ there is an associated p-adic absolute value on $\mathbb{Q}$ obtained by factoring out the powers of $p$ in any given rational $x$ to get

$$x = p^{n_0} \frac{a}{b}$$

with $p \nmid ab$.

With this factorisation in hand, the p-adic absolute value of $x$ is then defined as

$$|x|_p = p^{-n_0}$$

if $x \neq 0$, and we set $|0|_p = 0$. (As mentioned earlier, the number $n_0$ is called the p-adic valuation of $x$).

It is straightforward to verify that this is a non-archimedian absolute value on $\mathbb{Q}$. It has some surprising features. For example, unlike the usual Euclidean absolute value function on $\mathbb{Q}$ which can take any non-negative rational value, the p-adic absolute value function can only take values in the discrete set

$$\{p^n : n \in \mathbb{Z}\} \cup \{0\}$$

For example, for any prime $p$ and any integer $k \geq 1$ we have

$$|p^k|_p = p^{-k}, \qquad \left|\frac{1}{p^k}\right|_p = p^k$$

Note that the p-adic absolute value of $\frac{1}{p^k}$ is large, while that of $p^k$ is small.
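The definition of the p-adic absolute value translates directly into a few lines of Python. The sketch below is illustrative only: the function names `padic_abs` and `satisfies_ultrametric` are hypothetical, and the prime $5$ and the sample rationals are assumed values chosen for the demonstration, not taken from the text.

```python
from fractions import Fraction
from itertools import product

def padic_abs(x, p: int) -> Fraction:
    """Return |x|_p = p**(-n0), where n0 is the p-adic valuation of x, and |0|_p = 0."""
    x = Fraction(x)
    if x == 0:
        return Fraction(0)
    num, den, n0 = x.numerator, x.denominator, 0
    while num % p == 0:      # powers of p in the numerator make |x|_p smaller
        num //= p
        n0 += 1
    while den % p == 0:      # powers of p in the denominator make |x|_p larger
        den //= p
        n0 -= 1
    return Fraction(p) ** (-n0)

def satisfies_ultrametric(samples, p: int) -> bool:
    """Spot-check |x + y|_p <= max(|x|_p, |y|_p) on every pair of samples."""
    return all(
        padic_abs(x + y, p) <= max(padic_abs(x, p), padic_abs(y, p))
        for x, y in product(samples, repeat=2)
    )

print(padic_abs(250, 5))                # 1/125
print(padic_abs(Fraction(3, 50), 5))    # 25
print(padic_abs(7, 5))                  # 1

samples = [Fraction(n, d) for n in range(-6, 7) for d in (1, 2, 3, 5, 10)]
print(satisfies_ultrametric(samples, 5))   # True
```

A finite spot-check like this is of course not a proof, but it makes the ultrametric inequality and the discreteness of the values easy to see.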

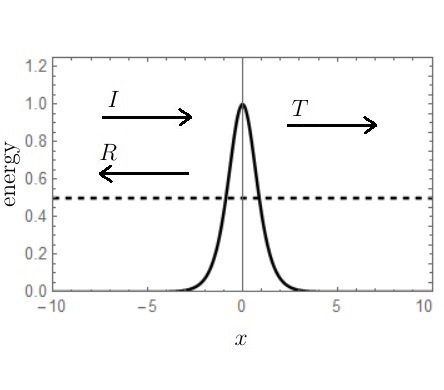

Now consider the metric space $(\mathbb{Q}, d_p)$ where $d_p$ is defined as

$$d_p(x, y) = |x - y|_p$$

By virtue of Theorem 1, $d_p$ is an ultrametric and $(\mathbb{Q}, d_p)$ is an ultrametric space. Since the p-adic absolute value function has some counterintuitive features, it is not surprising that $d_p$ also gives some counterintuitive results. For example, the numbers $1$ and $1 + p^3$ are much ‘closer’ to each other with regard to this ultrametric than the numbers $1$ and $2$, because

$$d_p(1, 1 + p^3) = |p^3|_p = p^{-3}$$

whereas

$$d_p(1, 2) = |1|_p = 1$$

In addition, we can use it to show that the sequence $\{x_n\}$ where

$$x_n = 1 + p + p^2 + \cdots + p^n$$

is Cauchy with respect to $d_p$, whereas it is violently non-Cauchy with respect to the usual Euclidean absolute value. We have

$$|x_{n+1} - x_n|_p = |p^{n+1}|_p = p^{-(n+1)} \to 0 \quad \text{as } n \to \infty$$

It follows from Theorem 2 in the previous section that the sequence $\{x_n\}$ is Cauchy with respect to the p-adic absolute value. In fact, the infinite series

$$\sum_{n=0}^{\infty} p^n = 1 + p + p^2 + \cdots$$

has the sum $\frac{1}{1-p}$ in the ultrametric space $(\mathbb{Q}, d_p)$ (this formula can be derived in the usual way for geometric series) but the series diverges in $(\mathbb{Q}, d)$.
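The p-adic convergence of this geometric series can be observed directly. The following is a minimal Python sketch under stated assumptions: the helper names `padic_abs` and `partial_sum` are hypothetical, and $p = 3$ is an arbitrary illustrative prime.

```python
from fractions import Fraction

def padic_abs(x, p: int) -> Fraction:
    """Return the p-adic absolute value of a rational x (with |0|_p = 0)."""
    x = Fraction(x)
    if x == 0:
        return Fraction(0)
    num, den, n0 = x.numerator, x.denominator, 0
    while num % p == 0:
        num //= p
        n0 += 1
    while den % p == 0:
        den //= p
        n0 -= 1
    return Fraction(p) ** (-n0)

def partial_sum(n: int, p: int) -> int:
    """Return 1 + p + p**2 + ... + p**n."""
    return sum(p ** k for k in range(n + 1))

p = 3
limit = Fraction(1, 1 - p)   # the claimed p-adic sum 1/(1 - p)
for n in range(6):
    x_n = partial_sum(n, p)
    # The Euclidean size of x_n grows, while its p-adic distance to 1/(1-p) shrinks.
    print(n, x_n, padic_abs(x_n - limit, p))
```

The last column is $p^{-(n+1)}$ at each step, since $x_n - \frac{1}{1-p} = -\frac{p^{n+1}}{1-p}$ and $1 - p$ is not divisible by $p$.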

The following Theorem proves that the ultrametric space $(\mathbb{Q}, d_p)$ is not complete in a way which is analogous to how $(\mathbb{Q}, d)$ is not complete.

Theorem 3. The field $\mathbb{Q}$ of rational numbers is not complete with respect to the p-adic absolute value.

Proof: To prove this, we will create a Cauchy sequence with respect to the p-adic absolute value function whose limit does not belong to $\mathbb{Q}$.

Let $1 < a < p - 1$ be an integer (such an $a$ exists when $p > 3$). Recall that a property of the Euler totient function is that for any prime $p$ and any integer $n \geq 1$ we have

$$\varphi(p^n) = p^n - p^{n-1}$$

Also recall the Euler-Fermat Theorem which says that if $\gcd(a, m) = 1$ then

$$a^{\varphi(m)} \equiv 1 \pmod{m}$$

With these in hand, consider the sequence $\{x_n\} = \{a^{p^n}\}$. Then since we have $\gcd(a, p^n) = 1$, the Euler-Fermat Theorem tells us that

$$a^{p^n - p^{n-1}} \equiv 1 \pmod{p^n}$$

Therefore

$$x_n - x_{n-1} = a^{p^n} - a^{p^{n-1}} = a^{p^{n-1}} \left( a^{p^n - p^{n-1}} - 1 \right)$$

must be divisible by $p^n$, so we have

$$|x_n - x_{n-1}|_p \leq p^{-n} \to 0 \quad \text{as } n \to \infty$$

and so the sequence $\{x_n\}$ is Cauchy with respect to the p-adic absolute value, by virtue of Theorem 2. If we call the limit of this sequence

$$x = \lim_{n \to \infty} x_n$$

we can write the following:

$$x^p = \lim_{n \to \infty} x_n^p = \lim_{n \to \infty} a^{p^{n+1}} = \lim_{n \to \infty} x_{n+1} = x$$

Since $|x_n|_p = 1$ for all $n$, the limit is non-zero, and so $x^{p-1} = 1$. Moreover, $x_n \equiv a \pmod{p}$ for all $n$, so $|x - a|_p < 1$, and because $1 < a < p - 1$ neither $a - 1$ nor $a + 1$ is divisible by $p$, which rules out $x = 1$ and $x = -1$. Therefore since $x \neq \pm 1$, the limit of the sequence must be a nontrivial $(p-1)$-th root of unity, so it cannot belong to $\mathbb{Q}$, whose only roots of unity are $\pm 1$. This proves that the ultrametric space $(\mathbb{Q}, d_p)$ is not complete.
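The behaviour of the sequence used in this proof can be inspected with ordinary integer arithmetic. The sketch below is only an illustration: the names `valuation` and `power_sequence` are hypothetical, and the choices $p = 5$ and $a = 2$ (so that $1 < a < p - 1$) are assumptions made for the demonstration.

```python
def valuation(n: int, p: int) -> int:
    """Return the largest k such that p**k divides the non-zero integer n."""
    if n == 0:
        raise ValueError("the valuation of 0 is not a finite integer")
    k = 0
    while n % p == 0:
        n //= p
        k += 1
    return k

def power_sequence(a: int, p: int, count: int):
    """Return the terms x_0, ..., x_{count-1} with x_n = a**(p**n)."""
    return [a ** (p ** n) for n in range(count)]

p, a = 5, 2
xs = power_sequence(a, p, 5)
for n in range(1, len(xs)):
    # Consecutive terms agree modulo p**n, so |x_n - x_{n-1}|_p <= p**(-n).
    print(n, valuation(xs[n] - xs[n - 1], p))
for n, x in enumerate(xs):
    # Every term is congruent to a modulo p, and x**(p-1) is 1 modulo p**(n+1).
    print(n, x % p, (x ** (p - 1) - 1) % p ** (n + 1))
```

The first loop shows the valuations of the consecutive differences growing at least linearly, and the second shows the terms behaving more and more like a $(p-1)$-th root of unity that is congruent to $a$ modulo $p$.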

Although $\mathbb{Q}$ is not complete with regard to the p-adic absolute value, we can construct the p-adic completion $\mathbb{Q}_p$ in a manner analogous to Cantor’s construction of $\mathbb{R}$ as a completion of $\mathbb{Q}$. Investigating the fine details of this and the properties of $\mathbb{Q}_p$ then leads one into the rich literature on p-adic analysis, which I hope to explore further in future notes.
